“Next, next, next…done.”

That’s still the status quo in security awareness training—even though threats have evolved, tech stacks have changed, and the way people work (and learn) looks nothing like it did a decade ago.

But what if the next evolution isn’t just better security awareness content or shorter modules? What if it’s personalized coaching, powered by AI tools that understand context, behavior, and timing?

We’ve seen this idea recently floated in industry circles: a near-future where each employee has an agentic assistant—one that adapts to their preferences, risk profile, and behavior—and walks them through security guidance as they work.

On demand. In context. With reporting that reflects what they actually learned, not just what they clicked.

So, is this the future? And if it is, who’s already paving the way?

Why the traditional training model breaks down

We’ve worked with security leaders across industries, most of whom echo the same frustrations:

“Our workforce spans generations and tech skill levels.”

“We’ve modernized our infrastructure, but training feels stuck in 2015.”

“Our most effective campaign? Posters in bathroom stalls.”

These complaints reflect a deeper tension at the heart of corporate training: the training methods haven’t kept up with the people they’re supposed to protect.

Traditional security awareness relies on static content delivered at fixed intervals. It assumes everyone needs the same training, in the same format, at the same time—regardless of their role, their risk level, or how people learn about security.

Over time, this approach turns into disengagement. Fact is, people are tired of “check-the-box” training.

Over 71% of employees admit to taking risky actions, and 96% of them knew better. That’s not a knowledge gap; it’s a context gap.

As security professionals, we’re not losing to ignorance. We’re losing to irrelevance.

Training fails when it doesn’t show up at the right time, in the right place, or in a format people can act on. Which is exactly where the idea of agentic, AI-driven coaching hits a nerve.

AI security awareness: training that knows and adapts to you

The next evolution of cybersecurity learning is agentic: AI-powered assistants embedded in daily workstreams that adapt not just to what people need to know, but how they’re best supported in learning it.

These agentic systems reflect the growing trend of AI in information security—moving from passive education to security awareness solutions that support just-in-time learning and AI risk management.

These systems understand:

Preferences: Do I learn better with text, video, or voice?

Behavioral risk patterns: What mistakes do I tend to make? Where have I improved?

Job responsibilities: What types of data or systems do I interact with daily?

It’s security awareness that isn’t just personalized in name, but genuinely personal in function. Take a look:

| Role | What they’re dealing with | AI-powered awareness action |
| --- | --- | --- |
| Finance associate | Approving a new vendor for the first time | Delivers a quick walkthrough on invoice fraud specific to their role and task |
| Marketing lead | Ignored multiple browser security warnings during campaign work | Sends a timely, non-punitive phishing awareness nudge |
| Plant technician | Needs to learn a new machine; has dyslexia and prefers visuals | Provides a short, voice-narrated video in dyslexia-friendly format tailored to their role |

This is what happens when awareness becomes contextual, meeting people at the intersection of risk, role, and readiness.

AI security training like this doesn’t interrupt, it empowers. It doesn’t push policy, but provides support. And that changes the relationship between people and security from adversarial to aligned.

This vision may feel futuristic, but it’s grounded in everything we already know works:

Behavior change comes from context, not coercion

Real-time coaching has greater staying power than annual refreshers

Tailored content drives down real risk more effectively than blanket training

Metrics matter, but only when they reflect real improvement, not just compliance clicks

Could an AI assistant pass an audit?

Yes, if done right.

When asked whether an agentic model could replace traditional e-learning, one ISO 27001 auditor gave a clear answer: if it’s role-based, documented, and demonstrably effective, it counts.

That means you should be able to:

Prove that people engaged with the material

Show that it was relevant to their job

Demonstrate that their behavior changed as a result

It’s not just valid—it’s arguably more audit-ready than traditional training, because it’s tailored, tracked, and responsive to real risk.

In fact, this kind of role-specific, behavior-aware training aligns well with evolving standards around AI compliance in cybersecurity, which increasingly call for transparency, accountability, and provable outcomes.

So what’s missing?

The ingredients for this future already exist, just not all in one place. They’re scattered across smart tools, automated systems, and creative teams that know what it takes to connect awareness to action.

This is the promise of AI security awareness: a smarter, more human-centered model that combines machine learning with real-time behavioral insight.

What’s needed now is integration: bringing identity, risk posture, role data, and behavioral signals into a single, intelligent loop. And that’s where security awareness platforms like Secure Practice already shine.

How the Secure Practice approach fits into the future

At Secure Practice, our mission has always been to make security more relatable, by understanding behavior, reducing friction, and helping people feel capable, not just compliant.

It’s why we’re constantly thinking about how to support safer habits in ways that genuinely work for people—with or without AI.

Our goals are human, not just technical, so we’re not building AI assistants. But we are building the systems those assistants would rely on.

By using the Secure Practice platform to deliver security training, you get the inputs that an agentic assistant would need, and the outputs that auditors, security teams, and stakeholders already expect:

1. Role-based training journeys

Secure Practice helps you build awareness programs that reflect how people actually work. Whether someone is in finance, customer support, or DevOps, their risk profile (and the training they need) will differ.

We let you tailor journeys to roles, departments, seniority levels, and even regulatory exposure. In turn, you deliver content that feels intuitive, not generic.

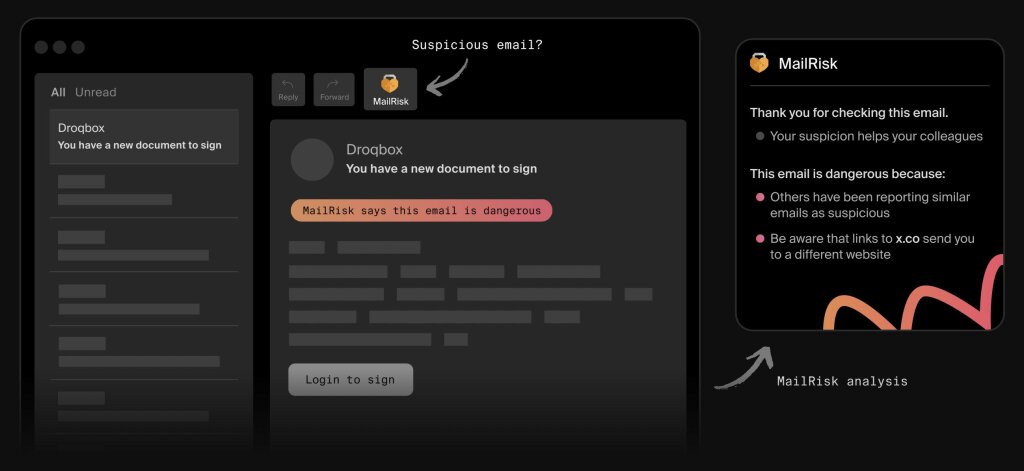

2. Behavior-linked phishing simulations

Not all simulations are created equal. Ours are tied to behavior patterns, not just calendar cycles.

If someone recently fell for a suspicious invoice, their next simulation will reflect that threat vector. If someone always reports suspicious emails, we reinforce that behavior with targeted praise or higher challenge scenarios.

This creates a dynamic feedback loop—one any AI security training tool would depend on to stay adaptive and aligned to personal risk. We’re already generating that data.
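To make the feedback loop concrete, here is a minimal Python sketch of the rule described above. It is an illustration only, not how the Secure Practice platform is implemented: the `SimulationHistory` record, the `choose_next_simulation` function, the vector names, and the three-report threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SimulationHistory:
    """Hypothetical per-person record of recent phishing simulation outcomes."""
    last_failed_vector: Optional[str]  # e.g. "invoice_fraud"; None if no recent failure
    reports_in_a_row: int              # consecutive simulations this person reported


def choose_next_simulation(history: SimulationHistory, streak_threshold: int = 3) -> dict:
    """Pick the next simulation from observed behavior, not from a calendar."""
    if history.last_failed_vector is not None:
        # A recent failure takes priority: revisit the same threat vector.
        return {"vector": history.last_failed_vector, "difficulty": "standard", "feedback": None}
    if history.reports_in_a_row >= streak_threshold:
        # Consistent reporters get praise and a more challenging scenario.
        return {"vector": "any", "difficulty": "hard", "feedback": "praise"}
    # No strong signal either way: fall back to a standard simulation.
    return {"vector": "any", "difficulty": "standard", "feedback": None}


# Example: someone who recently fell for a suspicious invoice.
next_sim = choose_next_simulation(SimulationHistory("invoice_fraud", 0))
print(next_sim["vector"])
```

The point of the sketch is the ordering of the rules: a recent mistake shapes the next scenario first, and good reporting habits are rewarded rather than ignored.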

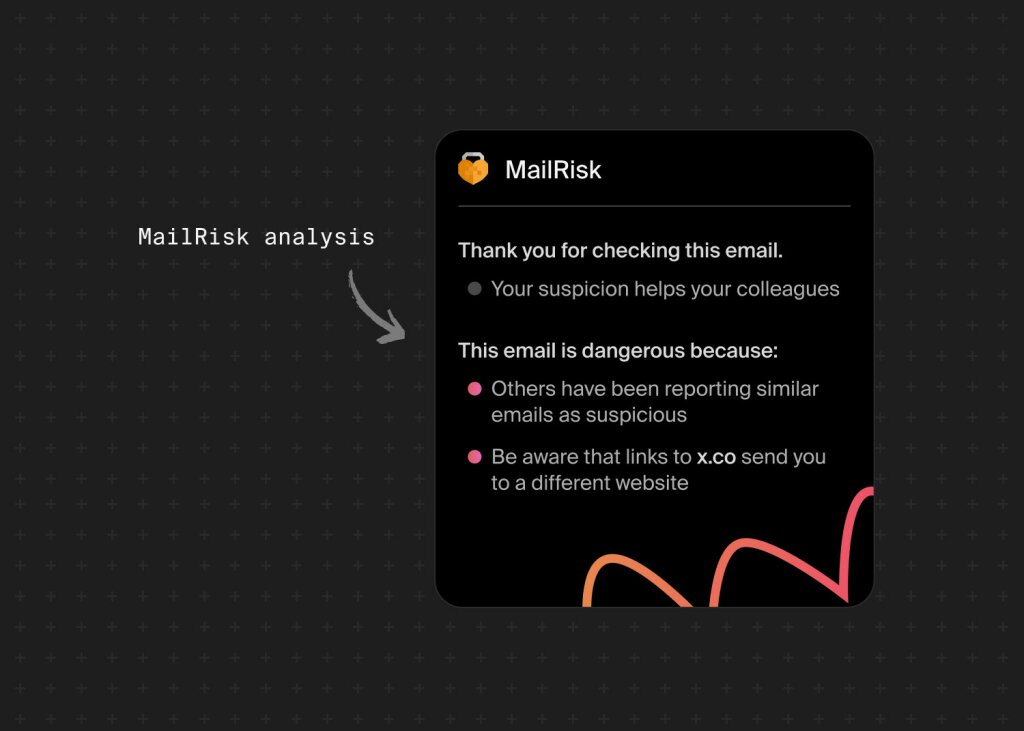

3. Real-time coaching nudges

In traditional training, the delay between mistake and lesson can be weeks—or months. In Secure Practice, learning happens as close to the behavior as possible.

We deliver contextual security nudges through SMS, Teams, or email, using tone and timing that match your company culture.

It’s supportive, not spammy. And it’s exactly how an agentic assistant would intervene: quietly, quickly, and constructively.

4. Risk dashboards that track actions, not just attendance

Compliance platforms show you who completed training. Secure Practice shows you where you’ve improved, what’s stayed risky, and where to focus next.

These are the exact insights an AI-driven risk assessment would need to prioritize who gets coached, when, and how.

Our human risk metrics dashboards combine simulation performance, report frequency, learning engagement, and qualitative feedback. Together they create a clear, role-based record of training activity—not just a list of who clicked a link, but a timeline of meaningful interactions.

This makes audit prep easier, but more importantly, it helps you focus your efforts where they matter most.

See what behaviors changed over time, not just what boxes were ticked

Anyone can log training completions. What matters more is:

Did colleagues stop clicking on real phishing links after a specific module?

Have teams started reporting suspicious emails more frequently?

Did a high-risk department become more vigilant over the last quarter?

Secure Practice combines behavioral signals (from phishing simulations, reporting tools, and risk indicators) with training data to give you a true picture of behavior change, proving impact, not just compliance.
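As a rough illustration of the first question above, the sketch below compares phishing click rates before and after a training module. It assumes a hypothetical list of event records with `sent` and `clicked` fields, and the function names are made up for this example. A before-and-after comparison like this suggests a trend; on its own it does not prove the module caused the change.

```python
from datetime import date


def click_rate(events, start, end):
    """Share of phishing emails clicked among those sent in [start, end)."""
    in_window = [e for e in events if start <= e["sent"] < end]
    if not in_window:
        return None  # no data in this window
    return sum(1 for e in in_window if e["clicked"]) / len(in_window)


def click_rate_change(events, module_date, window_start, window_end):
    """Click rate after the module minus click rate before it (negative means fewer clicks)."""
    before = click_rate(events, window_start, module_date)
    after = click_rate(events, module_date, window_end)
    if before is None or after is None:
        return None
    return after - before


# Hypothetical data: four emails before the module (two clicked), four after (one clicked).
events = [
    {"sent": date(2024, 1, 10), "clicked": True},
    {"sent": date(2024, 1, 20), "clicked": True},
    {"sent": date(2024, 2, 5), "clicked": False},
    {"sent": date(2024, 2, 15), "clicked": False},
    {"sent": date(2024, 3, 10), "clicked": True},
    {"sent": date(2024, 3, 20), "clicked": False},
    {"sent": date(2024, 4, 5), "clicked": False},
    {"sent": date(2024, 4, 15), "clicked": False},
]

change = click_rate_change(events, date(2024, 3, 1), date(2024, 1, 1), date(2024, 5, 1))
print(change)  # -0.25: the click rate fell from 0.5 to 0.25
```

The same pattern works for reporting frequency or department-level vigilance: pick a signal, pick a point in time, and compare the windows on either side.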

You can show stakeholders that your awareness program isn’t just active—it’s working.

Start moving towards smarter security awareness

We’re helping organizations train like the future is already here—because in many ways, it is.

But you don’t need a fully autonomous AI assistant to start acting like you have one. Start with these shifts:

Identify where human risk is most concentrated in your organization

Look beyond job titles. Use behavioral data like phishing simulation results, reporting habits, or known weak spots to understand who needs what kind of support, and when.

Align learning formats and delivery methods with how people prefer to engage

Some people want a quick read. Others need visuals, or even real-time coaching. Use security content tools that let you flex delivery without compromising the message.

Focus on timing and relevance, not just repetition

One-size-fits-all training delivered every October won’t drive change. The key is reaching people at moments that matter, with content that speaks directly to their context.

Choose tools that connect training to behavior, not just policy

Don’t settle for checkboxes. Use security awareness software that helps you understand whether people are doing the right thing, not just reading the right thing.

AI security training may not be mainstream yet, but the foundations are strong.

So maybe the question isn’t “Who will build it first?”

Maybe it’s “How do we connect what we already have in smarter ways?”

Smart security awareness starts with small, strategic shifts

Secure Practice gives you the tools to personalize, prioritize, and improve security culture.